After years of claiming otherwise, Facebook and Twitter have succumbed to partisan pressure and begun openly deciding what is and is not true.

This is the sad result of this week’s dust-up over a New York Post story detailing explosive allegations about former Vice President Biden and his son, Hunter.

But that blockbuster October surprise was quickly overshadowed by Facebook’s and Twitter’s reaction to it. Facebook, according to Policy Communications Director Andy Stone, is “reducing its distribution on the platform” pending a fact-check. That means Facebook decided on its own that the story was false and is treating it like misinformation. It won’t appear in the most prominent places on Facebook’s platform, including users’ News Feeds. Facebook has claimed that its misinformation policies reduce a story’s spread by over 80%.

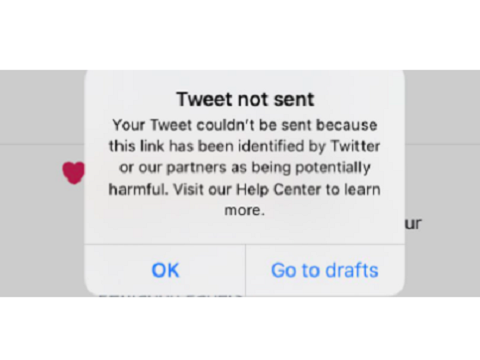

Not to be outdone, Twitter attempted to eliminate the story by banning users from linking to it, suspending a Trump campaign account that tried to share a video referencing the story, and, for a short time, prohibiting the New York Post itself from using its platform. Twitter’s justification was that the story contained “hacked” materials, but this flimsy explanation was clearly a pretense. Twitter removed the story because it looked, to them, to be false. (Facing significant backlash, Twitter has retreated from this position.)

No matter how you feel about the Post story or about the social media platforms themselves, this is a sad result.

It is futile, stupid, and wrong for these platforms to deliberately slow the spread of a news story because some unnamed person within the company hierarchy has decided that story is false. That is true of any news story, but it’s particularly the case for one concerning a presidential candidate within weeks of the election.

Let’s start with the futility. Facebook and Twitter, despite their size and popularity, cannot control the news. This story was featured in a major American newspaper. And stories about the story, whether supportive or skeptical, were covered in every other newspaper. Those stories were shareable on Facebook and Twitter. And the most watched news network (Fox News) covered the story in every hour of its programming. The platforms’ lack of control was so obvious that, even after Twitter banned the story, it was, for a time, still trending … on Twitter!

The only thing that Facebook and Twitter managed to do was inject themselves into the story. (This is the stupid.) I don’t know if the social media giants are aware, but there have been some ongoing debates about their role in the American political process. The three biggest accusations leveled against them are (in no particular order): they have too much power, they are biased against Republicans, and they’ve destroyed traditional news sources. With a single terrible decision, they’ve appeared to confirm all three accusations.

For those of us who believe the critiques of social media are wildly overblown and often just wrong (whether from left or right), this is particularly disheartening. How do you convince a Republican that Facebook and Twitter are not biased when, in the lead-up to the presidential election, they restricted a story critical of the Democratic nominee? How can you convince a progressive that Big Tech does not have too much power over our lives and does not need to be “broken up” when these companies blew past their own policies and safeguards to prohibit a newspaper from promoting its own reporting? In both instances, it has become much harder.

With these decisions, the likelihood that not just Twitter and Facebook, but all of the internet, will be subjected to a political reckoning has become much greater. Free expression on the internet will suffer from whatever legislation that reckoning produces. So will Facebook and Twitter.

But all of that boils down to a more fundamental point. Social media companies have received near-constant pressure to stop misinformation, disinformation, election interference, and fake news. They are haunted by the specter of the 2016 election and the perception (whether fair or not) that lax content moderation policies influenced the result. With laser-like focus on not making those same mistakes again, they’ve decided to make all new ones.

Facebook set up extensive content moderation networks of humans and computers to enforce rulesets so vast that no user can possibly be expected to know them all, let alone comply. They partnered with federal governments to proactively tackle foreign operations, and this, in turn, has metastasized into taking down “inauthentic” domestic operations. They’ve contracted with a network of international fact-checkers to police misinformation. They’ve created ad policies so strict that, in the final week before the election, you are banned from running new political ads. Twitter has its own insipid flavor of all these same policies.

But they’ve forgotten what they are: a place for people to talk to each other. They are companies founded in the American spirit of free expression, intent on allowing that free expression to expand and flourish the world over.

Somewhere in the morass of bureaucracy these companies created, this New York Post story tripped a switch that made them act hastily. Perhaps their national security contacts said, “be on the lookout for a false Biden story.” Perhaps people sharing a lot of New York Post content also share a lot of fake news, making the algorithm discount the validity of Post stories. Or maybe a few employees just didn’t like the reporting. If the companies had behaved with their overarching strengths and values in mind (at least the ones they claim to have), however, they would not have acted. And that lack of respect for expression in all of their efforts has cost them here.

This is not to say that social media companies shouldn’t moderate content. They should! But they should do so with an eye toward their own goal and their own strengths – dialogue, conversation, and expression. That means you shut down vitriolic hate because it shuts down conversations. You block pornography because it’s not something people want to talk about with their friends and family. You stop harassment because it drives people off your platform.

But it is wrong to block a news story about a major-party presidential candidate, because during election season that’s what everyone wants to talk about.

Social media companies (and often their critics too) act as if incendiary political speech that may be false or misleading is a brand-new phenomenon, and as if no voter before now ever heard untrue news about the candidates close to an election. These are age-old problems in a democracy. Facebook and Twitter cannot solve them with a half-baked update to their Community Standards or a post-hoc justification for their actions.

Which is why it is wrong to try. Let news organizations debunk bad reports. Let politicians defend themselves against false accusations. Let voters make decisions about the credibility of sources and candidates.

None of these solutions are perfect, but all are better than the faceless judgement of Facebook and Twitter.